Effortlessly Scrape Product Data from any Shopify website

Seamlessly extract every product's details from any Shopify-powered website and get a complete product dataset.

We have done 90% of the work already!

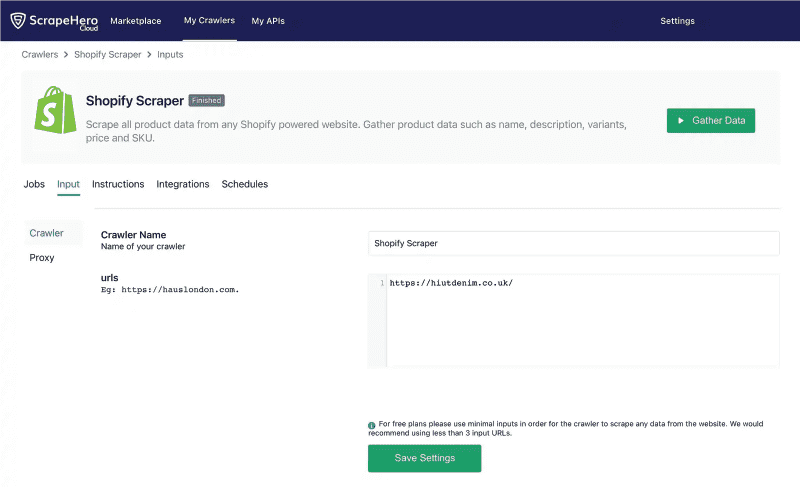

It's as easy as Copy and Paste

Start

Enter the URL of the Shopify store you want to scrape, choose your parameters, and click Start to begin scraping.

Download

Download the data in Excel, CSV, or JSON format, or link your Dropbox account to store your data there.

Schedule

Schedule crawlers to run hourly, daily, or weekly and get the latest product details delivered to your Dropbox.

Sample Data

- HIUT DENIM CO.

- HAUS London

- LTT store

- Reddress

One of the most sophisticated Shopify scrapers available

- Scrape all products from any Shopify e-commerce website.

- Extract details for every product, including title, description, price, and variants.

- Collect 10+ distinct data points for each product.

- Collect data on a recurring schedule.

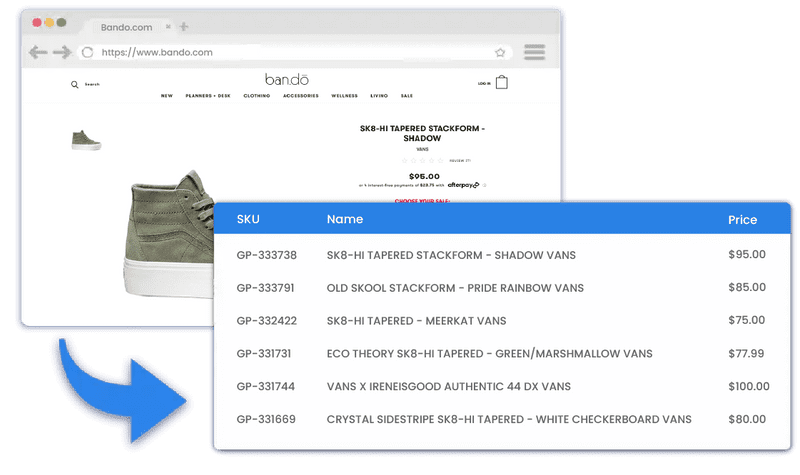

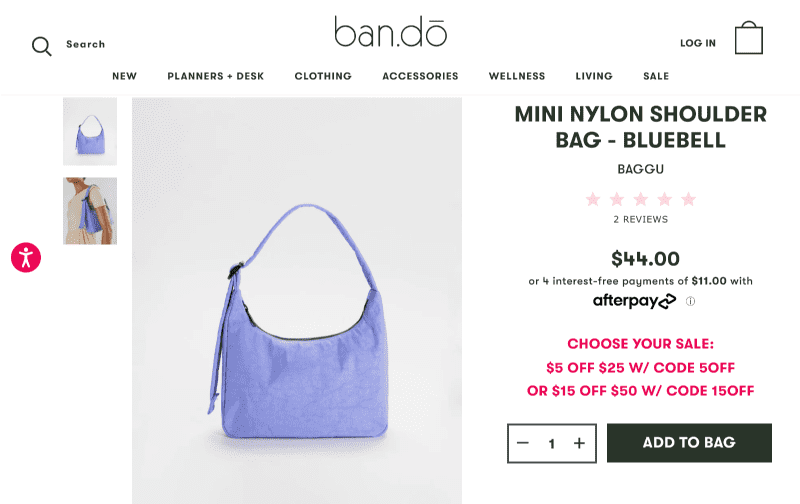

Extract all products from any Shopify website.

You can download all product data from any Shopify website. Just provide the Shopify store URLs, and the scraper returns the complete list of products as a spreadsheet.
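As a rough illustration of how product records with variants can become spreadsheet rows, here is a minimal Python sketch. It assumes input records shaped loosely like the products JSON that Shopify storefronts publish (a `title`, a `body_html` description, and a list of `variants` with `price` and `sku`). The sample products, the output column names, and the one-row-per-variant layout are illustrative assumptions, not this scraper's actual output schema.

```python
import csv
import io
import json

# Hypothetical product records, shaped loosely like Shopify's products JSON.
# Field names and values are illustrative assumptions only.
PRODUCTS = [
    {
        "title": "Classic Jeans",
        "body_html": "<p>Selvedge denim.</p>",
        "variants": [
            {"title": "30 / 32", "price": "180.00", "sku": "CJ-3032"},
            {"title": "32 / 32", "price": "180.00", "sku": "CJ-3232"},
        ],
    },
    {
        "title": "Canvas Tote",
        "body_html": "<p>Heavy cotton canvas.</p>",
        "variants": [{"title": "Default Title", "price": "45.00", "sku": "CT-1"}],
    },
]

# Hypothetical spreadsheet columns.
FIELDS = ["product_title", "description", "variant_title", "price", "sku"]


def flatten(products):
    """Yield one spreadsheet row per variant, repeating product-level fields."""
    for product in products:
        for variant in product.get("variants", []):
            yield {
                "product_title": product["title"],
                "description": product.get("body_html", ""),
                "variant_title": variant.get("title", ""),
                "price": variant.get("price", ""),
                "sku": variant.get("sku", ""),
            }


def to_csv(rows):
    """Serialize rows to a CSV string with a header line."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


rows = list(flatten(PRODUCTS))
csv_text = to_csv(rows)               # spreadsheet-friendly CSV
json_text = json.dumps(rows, indent=2)  # the same rows as JSON
print(csv_text)
```

Writing one row per variant keeps each price and SKU in its own cell; the trade-off is that product-level fields such as the description repeat on every variant row.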


Pricing

Easy to use and free to try

A few mouse clicks and a copy/paste are all it takes!

No coding required

Get data like the pros without any programming knowledge.

Support when you need it

The crawlers are easy to use, but we're here to help if you need it.

Extract data periodically

Schedule the crawlers to run hourly, daily, or weekly and get data delivered to your Dropbox.

Zero Maintenance

We take care of website structure changes and blocking by websites for you.

Frequently asked questions

Can I start with a one month plan?

All our plans are subscriptions that renew monthly. If you only need our service for one month, subscribe and then cancel your subscription within 30 days so that it does not renew.

Why does the crawler crawl more pages than the total number of records it collected?

Some crawlers collect multiple records from a single page, while others might need to visit 3 pages to collect a single record. For example, our Amazon Bestsellers crawler collects 50 records from one page, while our Indeed crawler first goes through the list of jobs and then opens each job's details page to collect more data.
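The arithmetic behind this can be sketched in a few lines of Python. The `pages_crawled` helper below is hypothetical: it assumes a crawler that walks listing pages and, optionally, opens a fixed number of detail pages per record. The 15-jobs-per-listing-page figure is an assumption for illustration, not a measured value for the Indeed crawler.

```python
import math


def pages_crawled(records, records_per_list_page, detail_pages_per_record=0):
    """Estimate how many pages a crawler visits to collect `records` records.

    Hypothetical model: the crawler walks listing pages holding
    `records_per_list_page` records each, then opens
    `detail_pages_per_record` extra pages for every record.
    """
    list_pages = math.ceil(records / records_per_list_page)
    return list_pages + records * detail_pages_per_record


# List-only crawler, 50 records per page (like the bestsellers example):
print(pages_crawled(500, 50))      # 10 pages for 500 records
# List + detail crawler, assuming 15 jobs per listing page:
print(pages_crawled(45, 15, 1))    # 3 list pages + 45 detail pages = 48
```

In the first case, pages are far fewer than records; in the second, the per-record detail pages push the page count above the record count.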

Can I get data periodically?

Yes. You can set up the crawler to run periodically by selecting your preferred schedule. Crawlers can be scheduled to run on an hourly, weekly, or monthly interval.

Can you build me a custom API or custom crawler?

Yes, we can build custom solutions for you. To get started, contact our Sales team using this link and describe your requirements in detail.

Will my IP address get blocked while scraping? Do I need to use a VPN?

No. We don't use your IP address to scrape the website; we use our own proxies to collect the data for you. All you have to do is provide the input and run the scraper.

What happens to my unused quotas at the end of each billing period?

All crawler page quotas and API quotas reset at the end of each billing period. Unused credits do not carry over to the next billing period and are non-refundable, which is consistent with most software subscription services.

Can I get my page quota back because I made a mistake?

Unfortunately, we cannot provide a refund or page credits for mistakes.

Here are some common scenarios we see in quota refund requests:

- Issues with the website you are trying to scrape

- Mistaken or accidental crawling (including cases such as "I was unaware of page credits" or "I accidentally pressed the Start button")

- Unsupported URLs, or duplicate URLs that have already been crawled

- Duplicate data on the website

- No results on the website for the search queries or URLs

How do I get location-based results, such as product pricing, availability, and delivery charges for a specific place?

Most sites display product pricing, availability, and delivery charges based on the user's location. Our crawler uses locations in US states, so the pricing it collects may vary. To get accurate results for a specific location, please contact us.